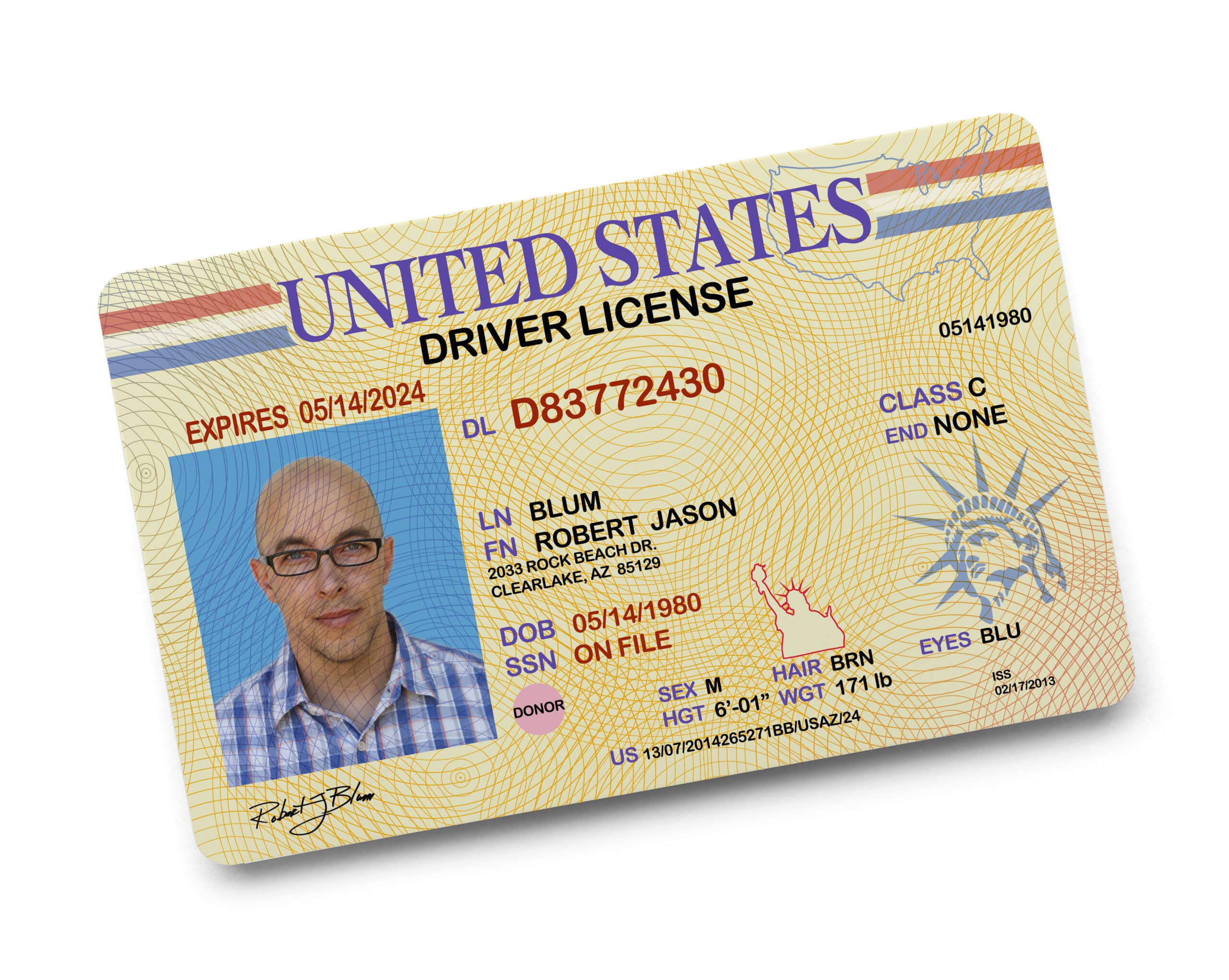

AI-Generated Fake IDs Are Being Used to Bypass Crypto Security Measures: Report

Fake IDs created by AI are being used to bypass security measures at cryptocurrency exchanges, according to a report from 404 Media.

OnlyFake, an underground website that can pump out realistic-looking fake IDs, has drawn attention for its claims that it uses neural networks to generate the IDs. This could have significant ramifications for the cryptocurrency industry.

404 Media bought a fake ID on the site for $15 and used it, as a test, to bypass identity verification controls at OKX, an exchange that has allegedly been used in past crimes.

OnlyFake’s owner, who goes by the pseudonym John Wick, also claimed in the report that its IDs could be used to fool identity verification at exchanges such as Binance, Kraken, and Coinbase.

Unchained reached out to each of these exchanges, as well as OKX, for comment but did not immediately receive a response.

Fake IDs being used to bypass security measures on exchanges isn’t new. In fact, they have been circulating on the Dark Web since at least 2019. However, the ease of use and lower cost offered by AI could supercharge these efforts and may bring a new wave of fraud that exchanges will need to grapple with.

In a Telegram channel associated with OnlyFake, an admin wrote that the site was against any illegal use of the images it generated.

“We are against fraud and harming people,” the admin wrote in the channel. “All generated images on the site are intended for legal use only. We bear no responsibility for how you may further use the images from the generator.”

Source credit: unchainedcrypto.com